Python web page parsing and the robots.txt file

有勇气的牛排


2023-05-18 20:25:39


1 The robots.txt file

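User-agent: names the crawler (search engine) that the following rules apply to; `*` matches every crawler and is the usual choice.

Disallow: a path that crawlers should not fetch. This only blocks crawling; a disallowed URL can still show up in search results if other pages link to it.

Allow: a path that crawlers may fetch, typically used to re-open part of a disallowed path.

Sitemap: the full URL of the site's sitemap file (e.g. sitemap.xml), telling search engines where to find it.

Crawl-delay: the number of seconds a crawler should wait between requests. It is non-standard: some crawlers honor it, while Googlebot ignores it.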

```txt
User-agent: *
Disallow: /login
Disallow: /register
Allow: /index
Allow: /article/*
Sitemap: https://www.***.com/sitemap_index.xml
```
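Python's standard library can check URLs against rules like these before a crawler requests them. Below is a minimal sketch using `urllib.robotparser`. It assumes a placeholder domain `www.example.com`, a crawler name `MyCrawler`, and an added `Crawl-delay: 5` line; none of these appear in the original example.

```python
from urllib import robotparser

# Rules from the example above; the domain and the Crawl-delay line are
# hypothetical additions for illustration
ROBOTS_TXT = """\
User-agent: *
Disallow: /login
Disallow: /register
Allow: /index
Allow: /article/*
Crawl-delay: 5
Sitemap: https://www.example.com/sitemap_index.xml
"""

rp = robotparser.RobotFileParser()
# parse() takes an iterable of lines; read() would download robots.txt instead
rp.parse(ROBOTS_TXT.splitlines())

base = "https://www.example.com"
for path in ("/login", "/register/step1", "/index", "/article/42"):
    # can_fetch(user_agent, url) -> True if the rules allow fetching the URL
    print(path, rp.can_fetch("MyCrawler", base + path))

print(rp.crawl_delay("MyCrawler"))  # 5
print(rp.site_maps())               # ['https://www.example.com/sitemap_index.xml']
```

`urllib.robotparser` treats a blank line as the end of a group, so a group's lines must be contiguous. It also matches paths by plain prefix in file order (first match wins) and does not expand `*` inside a path. As a result, `Allow: /article/*` has no effect there: `/article/42` is allowed only because no rule matches it. Against a live site you would call `rp.set_url("https://www.example.com/robots.txt")` and then `rp.read()` instead of `parse()`.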

1 Parsers available to BeautifulSoup

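xml (also written `lxml-xml`): fast, and the only parser that supports XML; requires the lxml C library.

lxml: fast and lenient with broken markup; requires the lxml C library.

html.parser: part of Python's standard library; moderate speed and lenient, although versions before Python 2.7.3 / 3.2.2 handle malformed documents poorly.

html5lib: the most lenient; parses documents the way a browser does and produces valid HTML5; slow, and must be installed separately (pure Python, no C extension).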
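The parser is chosen by the second argument to `BeautifulSoup()`. As a rough sketch of how the parsers differ, the loop below feeds the same malformed snippet to each one. lxml, html5lib and the lxml-backed "xml" parser are third-party packages, so the sketch reports a parser as missing instead of crashing when it is not installed.

```python
from bs4 import BeautifulSoup, FeatureNotFound

# Deliberately malformed markup: an <a> followed by a stray </p>
BROKEN = "<a></p>"

results = {}
for parser in ("html.parser", "lxml", "html5lib", "xml"):
    try:
        # The second argument of BeautifulSoup() selects the parser
        results[parser] = str(BeautifulSoup(BROKEN, parser))
    except FeatureNotFound:
        # Raised when the requested parser library is not installed
        results[parser] = "(parser not installed)"

for parser, output in results.items():
    print(f"{parser:12} -> {output}")
```

The built-in `html.parser` simply drops the stray `</p>` and yields `<a></a>`. The other parsers may add `<html>`/`<body>` wrappers or an XML declaration, which is why the same page can produce slightly different trees depending on the parser you pick.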

1.1 lxml:

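```python
import requests
from bs4 import BeautifulSoup

url = 'https://www.115z.com/html/17556.html'
headers = {
    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Mobile Safari/537.36'
}

res = requests.get(url, headers=headers, timeout=10)
res.raise_for_status()
# Pass the raw bytes (.content) so BeautifulSoup can detect the page encoding
soup = BeautifulSoup(res.content, 'lxml')

# Contents of <body>
print(soup.body)

# Elements with class="external" (the links on this page) and their href values
links = soup.select('.external')
print(links)
for link in links:
    # .get() returns None instead of raising KeyError if href is missing
    print(link.get('href'))

# All <p> tags, then just their text
paragraphs = soup.find_all('p')
print(paragraphs)
for p in paragraphs:
    print(p.text)
```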

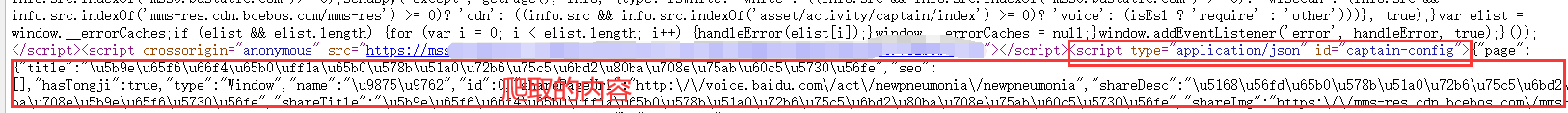

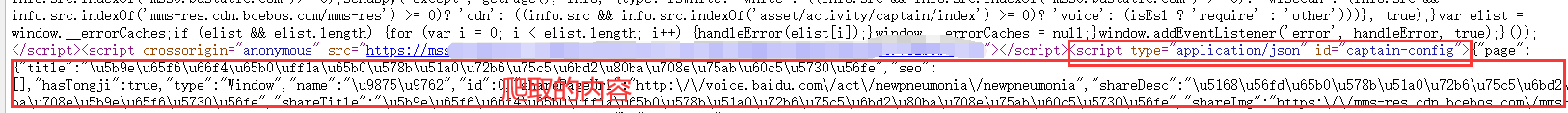
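The same `select()` / `find_all()` calls can be tried offline on a small string, which makes it easier to see what each returns. The sketch below uses hypothetical sample markup in place of the downloaded page. It also uses the built-in `html.parser` so that it runs without lxml; on well-formed input like this, the results are the same with `'lxml'`.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup standing in for a downloaded page
HTML = """
<body>
  <p>First paragraph</p>
  <a class="external" href="https://example.com/a">A</a>
  <a class="internal" href="/b">B</a>
  <a class="external">no href</a>
  <p>Second <b>paragraph</b></p>
</body>
"""

soup = BeautifulSoup(HTML, "html.parser")

# select() takes a CSS selector; "a.external" = <a> tags with class "external"
external = soup.select("a.external")
# .get() returns None instead of raising KeyError when the attribute is missing
hrefs = [a.get("href") for a in external]

# find_all() matches by tag name; get_text() joins text from nested tags too
texts = [p.get_text() for p in soup.find_all("p")]

print(hrefs)  # ['https://example.com/a', None]
print(texts)  # ['First paragraph', 'Second paragraph']
```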

1.2 Extracting the contents of a script tag with etree

爬取的内容:

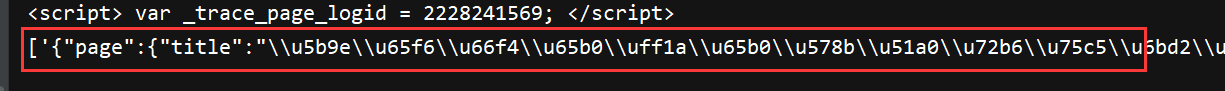

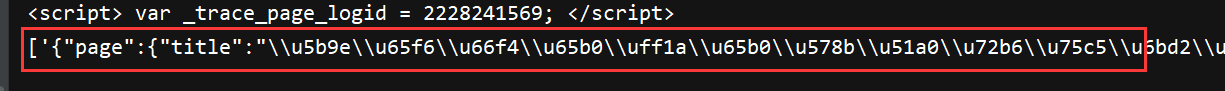

结果:

代码:

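```python
import requests
from lxml import etree

url = 'https://************************'

response = requests.get(url, timeout=10).text
html = etree.HTML(response)

# Use an XPath query on the etree to select the <script type="application/json">
# tags; /text() returns their contents as a list of strings
result = html.xpath('//script[@type="application/json"]/text()')
print(result)
```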
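Because `xpath(".../text()")` returns plain strings, the embedded JSON still has to be decoded. Below is a small offline sketch. It assumes a hypothetical page fragment with one `application/json` script tag and one ordinary script tag, and decodes each match with `json.loads`.

```python
import json
from lxml import etree

# Hypothetical page fragment with JSON embedded in a script tag
PAGE = """
<html><head>
<script type="application/json" id="data">{"title": "Hello", "tags": ["a", "b"]}</script>
<script>var x = 1;</script>
</head><body></body></html>
"""

html = etree.HTML(PAGE)
# Only the script whose type attribute is application/json is selected
raw = html.xpath('//script[@type="application/json"]/text()')
# Decode each JSON payload into Python objects (dicts, lists, ...)
data = [json.loads(s) for s in raw]

print(data[0]["title"])  # Hello
print(data[0]["tags"])   # ['a', 'b']
```

Filtering on `@type` keeps ordinary JavaScript out of the result; without that predicate, `json.loads` would fail on the `var x = 1;` script.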

1.3 html.parser:

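```python
import requests
from bs4 import BeautifulSoup

url = 'https://www.115z.com/list/5.html'
headers = {
    'user-agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Mobile Safari/537.36'
}

res = requests.get(url, headers=headers, timeout=10).content
print(res)

# Create a BeautifulSoup object using Python's built-in html.parser
soup = BeautifulSoup(res, 'html.parser')

# The <title> tag
print(soup.title)
# The <body> tag
print(soup.body)
# Grandparent of <title> (normally <html>)
print(soup.title.parent.parent)
print(soup.title.name)         # title
print(soup.title.parent.name)  # normally head

# soup.p is the first <p> tag in document order
print(soup.p)

# find_all() returns a list (ResultSet) of every matching tag
print(soup.find_all('a'))
print(soup.find_all('a')[0])

# href value of every <a> tag (None if the attribute is missing)
for link in soup.find_all('a'):
    print(link.get('href'))

# All text on the page; get_text() must be called, since printing
# soup.getText without parentheses only shows the method object
print(soup.get_text())
```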
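The tree navigation used above (`.name`, `.parent`, `find_all()`) can be checked offline as well. The sketch below assumes a small hypothetical page in place of the downloaded one. It also shows `find_all("a", href=True)`, which keeps only links that actually carry an `href`, and the `separator` / `strip` options of `get_text()`.

```python
from bs4 import BeautifulSoup

# Hypothetical sample page; in practice this would be the downloaded res
HTML = """<html><head><title>Demo page</title></head>
<body>
<p>Intro</p>
<a href="/list/1.html">One</a>
<a href="/list/2.html">Two</a>
<a>No link</a>
</body></html>"""

soup = BeautifulSoup(HTML, "html.parser")

title = soup.title
# .name is the tag name; .parent moves one level up the tree
print(title.name, title.parent.name, title.parent.parent.name)  # title head html

# href=True keeps only <a> tags that have an href attribute
hrefs = [a["href"] for a in soup.find_all("a", href=True)]
print(hrefs)  # ['/list/1.html', '/list/2.html']

# strip=True drops whitespace-only strings; separator joins the remaining pieces
page_text = soup.get_text(separator=" ", strip=True)
print(page_text)  # Demo page Intro One Two No link
```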

Reference:

https://juejin.cn/post/6960837848522031112
